Emerging Trends In Computational Fluid Dynamics

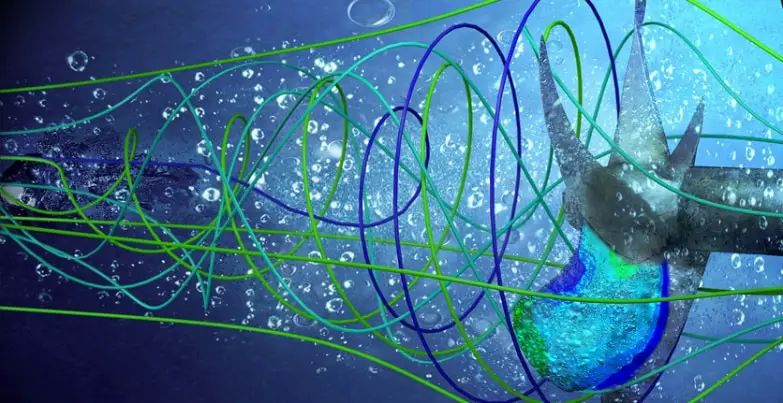

The landscape of Computational Fluid Dynamics (CFD) software has undergone a profound transformation in recent decades.

In the past, CFD was characterized by expensive, resource-intensive proprietary software requiring extensive training to operate effectively. This limited its usage to large organizations with the financial means to fully support and integrate such software.

However, the contemporary CFD environment has witnessed significant changes due to factors such as high-performance computing, open-source licensing models, and the utilization of cloud resources.

These developments have made CFD far more accessible. Companies can now scale their simulations easily, running multiple cases concurrently at much lower cost.

Simultaneously, advancements in solver capabilities, turbulence models, and automated mesh generation have enhanced the robustness and accuracy of CFD results.

This increased flexibility has facilitated the adoption of CFD by a broader spectrum of companies and industries. According to a recent report, the global CFD market was valued at $2 billion in 2022 and, at an expected growth rate of 8.3%, is projected to reach $3.8 billion by 2030.

In comparison, the market for cloud-based CFD is projected to grow by 9.4%, reaching a value of $2.4 billion by 2030.

As more companies continue to harness the potential of CFD, the range of applications for this software is expanding rapidly.

Because CFD has become an important part of so many industries, its emerging trends hold considerable potential for solving problems.

Before we jump into the trends, if you need to know what computational fluid dynamics software is all about, visit the page.

Now that that is taken care of, let’s start.

Emerging Trends In Computational Fluid Dynamics

Computational Fluid Dynamics (CFD) is a multidisciplinary field that has revolutionized how engineers and scientists understand, simulate, and optimize fluid flow in various applications.

Over the years, CFD has witnessed significant advancements, and emerging trends are poised to shape its future.

Below, we’ll explore some of the most exciting trends in CFD, offering a glimpse into how this field continues to evolve.

High-Performance Computing (HPC)

High-Performance Computing (HPC) is a specialized field of computing that focuses on using powerful computer systems and advanced techniques to process and solve complex problems or tasks that require exceptional computational power and speed.

HPC systems are designed to handle extremely large datasets and perform calculations at speeds well beyond what typical desktop or server computers can achieve.

Here’s a detailed explanation of HPC:

Key Characteristics of High-Performance Computing

- Specialized Hardware: HPC systems consist of clusters of high-end servers or supercomputers interconnected by high-speed networks. These servers are equipped with advanced processors, such as multi-core CPUs and Graphics Processing Units (GPUs), that can perform parallel processing and execute multiple tasks simultaneously.

- Parallel Processing: One of the core principles of HPC is parallelism. Instead of tackling a complex problem as a single, monolithic task, it’s divided into smaller, more manageable subtasks that can be processed concurrently. This approach significantly accelerates calculations, making HPC suitable for tasks that require substantial computation.

- Speed and Performance: HPC systems are optimized for maximum performance. They can execute calculations at incredibly high speeds, making them ideal for tasks like scientific simulations, weather forecasting, and data analysis in fields like genomics and artificial intelligence.
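The parallel-processing idea above can be sketched in a few lines of Python. This is a minimal illustration, not how a production CFD code is written: the function names and the "kinetic energy per cell" subtask are hypothetical, and each cell is assumed to have unit mass. Threads are used only to keep the example self-contained; in CPython they show the decomposition pattern rather than a real speedup, and actual HPC codes typically distribute the chunks across processes and nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, n_chunks):
    """Split a list into n_chunks contiguous pieces of near-equal size."""
    size, extra = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def partial_kinetic_energy(velocities):
    """Subtask: sum 0.5 * v^2 over one chunk (unit mass per cell assumed)."""
    return sum(0.5 * v * v for v in velocities)


def total_kinetic_energy(velocities, n_workers=4):
    """Run one subtask per chunk concurrently, then combine the partial sums."""
    chunks = split_into_chunks(velocities, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_kinetic_energy, chunks))
    return sum(partials)
```

The design point is that each chunk can be processed without knowing about the others, so the only coordination needed is the final sum.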

Artificial Intelligence (AI) and Machine Learning

Artificial Intelligence (AI) and Machine Learning are closely related fields that focus on developing computer systems capable of performing tasks that typically require human intelligence. They are at the forefront of technological advancements and have applications across various industries. Here’s an explanation of both AI and Machine Learning:

Artificial Intelligence (AI)

AI is a broad field of computer science that aims to create machines and software capable of tasks that typically require human intelligence. These tasks include reasoning, problem-solving, learning, understanding natural language, recognizing patterns, and making decisions.

Key components of AI

- Expert Systems: Expert systems are AI programs designed to mimic the decision-making abilities of a human expert in a particular domain. They use knowledge bases and inference engines to provide solutions to complex problems.

- Natural Language Processing (NLP): It focuses on the interaction between humans and computers through natural language. It enables computers to understand, interpret, and generate human language.

- Computer Vision: This field allows machines to interpret and understand visual information from the world, such as images and videos. It’s crucial for facial recognition, object detection, and autonomous vehicles.

- Robotics: AI is pivotal in developing intelligent robots and autonomous systems that can perform tasks in various domains, from manufacturing to healthcare.

- Machine Learning: Machine learning is a subset of AI that focuses on developing algorithms that enable computers to learn from and make predictions or decisions based on data.

Machine Learning (ML)

ML is a subfield of AI that concentrates on creating algorithms and models that allow computers to learn from data and make predictions or decisions based on it. Unlike traditional programming, where rules and instructions are explicitly coded, in machine learning the computer learns and improves its performance through experience and data.

Key components of Machine Learning

- Supervised Learning: A model is trained on a labeled dataset, meaning it learns from input-output pairs. The goal is to make predictions or classify new, unseen data accurately.

- Unsupervised Learning: Unsupervised learning involves training a model on unlabeled data to discover hidden patterns, group similar data points, or reduce the dimensionality of the data.

- Deep Learning: Deep learning is a subset of machine learning that uses neural networks with many layers (deep neural networks). It has been highly successful in tasks like image and speech recognition.
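To make the supervised-learning idea concrete, here is a minimal sketch that fits a straight line to labeled input-output pairs by least squares and then predicts the output for an unseen input. The function names and the training numbers are made up for illustration; real models and datasets are far larger.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept to labeled pairs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the fitted line to a new, unseen input."""
    slope, intercept = model
    return slope * x + intercept


# Made-up training data: each input in train_x has a known label in train_y.
train_x = [0.0, 1.0, 2.0, 3.0]
train_y = [1.0, 3.0, 5.0, 7.0]
model = fit_line(train_x, train_y)
```

Nothing in `fit_line` encodes the rule that generated the data; the slope and intercept are recovered from the examples alone, which is the contrast with explicitly coded rules described above.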

Reduced-Order Modeling (ROM)

Reduced-Order Modeling (ROM) is a computational technique for simplifying complex high-dimensional systems, particularly in fields such as engineering, physics, and fluid dynamics.

At its core, ROM is about creating efficient and manageable models of intricate systems by significantly reducing their dimensionality. This process allows researchers and engineers to perform simulations, optimizations, and control tasks with far less computational burden, making it a valuable tool in many applications.

In high-dimensional systems, which often involve many variables or degrees of freedom, traditional simulations can be exceedingly resource-intensive, requiring substantial computational power and time.

ROM addresses this challenge by distilling the essential information from these complex systems and then building a simplified model that retains the critical aspects of their behavior. This approach reduces computational demands and enables real-time control and decision-making.

The ROM process typically involves several key steps. Initially, data is collected from simulations or observations of the high-dimensional system to capture its behavior.

Next, various mathematical techniques, such as Proper Orthogonal Decomposition (POD), Principal Component Analysis (PCA), or Karhunen-Loève Expansion (KLE), are applied to extract the most influential modes or patterns from the data. These modes serve as the building blocks for the reduced-order model.

Subsequently, the ROM is constructed, often represented by equations governing the system’s behavior within the reduced subspace. To ensure its accuracy, the ROM is validated, often using techniques like cross-validation or error analysis.
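The steps above (collect snapshots, extract dominant modes, build the reduced representation, validate) can be sketched for the simplest possible case: a single POD mode. This is an illustrative sketch under stated assumptions, not a production ROM. The function names are hypothetical, the snapshots are not mean-centered, and the dominant mode is found by power iteration, a technique swapped in here to avoid external libraries; practical codes usually rely on a singular value decomposition and keep several modes.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def leading_pod_mode(snapshots, iterations=200):
    """Dominant POD mode via power iteration on C = sum_k u_k u_k^T.

    C is never formed explicitly: C v is accumulated as sum_k (u_k . v) u_k.
    """
    n = len(snapshots[0])
    mode = [1.0] * n  # assumed start vector; must not be orthogonal to the mode
    for _ in range(iterations):
        new = [0.0] * n
        for u in snapshots:
            weight = dot(u, mode)
            for i in range(n):
                new[i] += weight * u[i]
        norm = math.sqrt(dot(new, new))
        mode = [x / norm for x in new]
    return mode


def project(snapshot, mode):
    """Reduced coordinate: one number instead of len(snapshot) values."""
    return dot(snapshot, mode)


def reconstruct(coefficient, mode):
    """Map the reduced coordinate back to the full-dimensional space."""
    return [coefficient * m for m in mode]


def reconstruction_error(snapshot, mode):
    """Validation step: largest pointwise error of the one-mode approximation."""
    approx = reconstruct(project(snapshot, mode), mode)
    return max(abs(a - s) for a, s in zip(approx, snapshot))
```

Storing one coefficient per snapshot plus a shared mode, instead of every full snapshot, is where the reduction in storage and computation comes from; the reconstruction error indicates whether one mode is enough or more are needed.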

The advantages of Reduced-Order Modeling are significant. Computational efficiency is the most prominent benefit, drastically reducing the computational resources required for simulations. This makes it possible to conduct simulations in a fraction of the time, enabling more rapid problem-solving.

Additionally, ROM is instrumental in real-time applications, particularly in the aerospace, automotive, and manufacturing industries, where timely decision-making and control are essential. Reduced-order models also need far less storage than the original high-dimensional data, which makes them practical for large-scale applications.

Multiphysics and Multiscale Simulations

Multiphysics and multiscale simulations are advanced computational techniques that have revolutionized how scientists and engineers study and understand complex physical phenomena in various fields, including physics, engineering, materials science, and biology.

These simulation methods allow researchers to model and analyze the interactions between multiple physical processes (multiphysics) and phenomena occurring at different scales (multiscale).

Together, they provide a comprehensive view of intricate systems and phenomena that are otherwise challenging to explore.

In multiphysics simulations, scientists and engineers investigate systems where different physical processes interact. These processes include heat transfer, fluid flow, structural mechanics, electromagnetics, and more.

The interactions between these processes are often highly coupled, and understanding their combined effects is crucial for solving real-world problems. Multiphysics simulations enable researchers to explore how changes in one process impact others, leading to more accurate and holistic results.

For example, multiphysics simulations are essential in designing electronic devices, where heat dissipation and electromagnetic interactions play vital roles.
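A toy version of such a coupled problem is a resistor whose resistance depends on temperature while its temperature depends on the electrical power it dissipates. The sketch below alternates between the two models until they agree (a fixed-point, or partitioned, coupling). The lumped models, the function name, and all parameter names are assumptions chosen for illustration; a real device simulation would solve field equations on a mesh.

```python
def solve_electrothermal(voltage, r0, alpha, t_ref, t_ambient, r_thermal,
                         tol=1e-9, max_iter=100):
    """Fixed-point coupling of an electrical and a thermal lumped model.

    Electrical: R(T) = r0 * (1 + alpha * (T - t_ref)),  P = V^2 / R
    Thermal:    T = t_ambient + P * r_thermal
    """
    temperature = t_ambient
    for _ in range(max_iter):
        # Electrical solve, using the latest temperature estimate.
        resistance = r0 * (1.0 + alpha * (temperature - t_ref))
        power = voltage ** 2 / resistance
        # Thermal solve, using the power just computed.
        new_temperature = t_ambient + power * r_thermal
        if abs(new_temperature - temperature) < tol:
            return new_temperature, power
        temperature = new_temperature
    raise RuntimeError("coupling iteration did not converge")
```

Solving either model alone with a fixed input would miss the feedback: heating raises the resistance, which lowers the power, which in turn limits the heating.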

On the other hand, multiscale simulations deal with systems that exhibit phenomena at multiple levels of scale. These scales can range from the atomic and molecular levels to macroscopic levels.

Multiscale simulations allow researchers to bridge the gap between microscale and macroscale behaviors. For instance, in materials science, it is essential to understand how the behavior of individual atoms or molecules influences a material’s overall properties.

Multiscale simulations help track and analyze these interactions, aiding in developing novel materials with tailored properties.
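As a much simpler stand-in for atomistic-to-continuum modeling, the sketch below passes information from a fine scale to a coarse one: it computes an effective thermal conductivity for a layered material from the individual layers, then uses that single number in a macroscale heat-flux calculation. The function names and the layered geometry are assumptions chosen for illustration.

```python
def effective_conductivity_series(layers):
    """Heat flows across the layers; layers is a list of (thickness, k)."""
    total = sum(t for t, _ in layers)
    return total / sum(t / k for t, k in layers)


def effective_conductivity_parallel(layers):
    """Heat flows along the layers; thickness-weighted average of k."""
    total = sum(t for t, _ in layers)
    return sum(t * k for t, k in layers) / total


def macroscale_heat_flux(k_eff, delta_t, length):
    """Macroscale model: steady 1D conduction through a slab, q = k * dT / L."""
    return k_eff * delta_t / length
```

The macroscale function never sees the individual layers. It only receives the effective property computed at the finer scale, which is the basic hand-off that multiscale methods formalize.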

Multiphysics and multiscale simulations have become increasingly critical in addressing complex challenges. They are particularly valuable in biomedical research, where processes at cellular, tissue, and organ levels need to be studied simultaneously.

In climate modeling, understanding the interactions between the atmosphere, oceans, and land is crucial for accurately predicting weather patterns and climate change.

Additionally, multiphysics and multiscale simulations are indispensable for designing and optimizing novel technologies in nanotechnology, where materials and devices operate at the nanoscale.

Conclusion

Computational Fluid Dynamics is at the forefront of technological advancements, with emerging trends that promise to reshape how fluid flow is understood and utilized in various applications.

From integrating AI and machine learning to adopting reduced-order modeling and multiphysics and multiscale simulations, CFD is continually evolving to meet the needs of industry, research, and environmental challenges.

As these trends develop, we can anticipate more accurate, efficient, and accessible CFD simulations that drive innovation across multiple sectors.

Lucas Noah is a tech-savvy writer with a solid academic foundation, holding a Bachelor of Information Technology (BIT) degree. His expertise in the IT field has paved the way for a flourishing writing career, where he currently contributes to the online presence...